About 5 years ago I was introduced to Flyway, a tool for managing schema migrations using simple SQL scripts. I've used it on several projects now, and have more recently been introduced to Liquibase. The two tools both solve similar problems, with slightly different approaches. The main purpose of these tools is to version changes to the schema of the databasse.

Liquibase has a good list of best practices for that particular tool that work well for Flyway. I have a few of my own that I've learned through the years.

These are primarily tools for managing the schema, not data

If you find yourself using these tools to regularly insert 1000's of records, you might be using the wrong tool for the job. Yes, these tools can do that, but maybe you need a ETL tool or a bulk import API in your application.

One team I have worked on has regularly had multi-megabyte files full of INSERT statements to load data every few months. Liquibase does make this a little bit easier because it has built-in support for loading delimited files, and Flyway can be extended to do the same (which I did on one occasion). And in this instance, this was being used to load some read-only metadata into the system because nobody had invested in a better way to do so. Since the migrations were stored in the same git repository as the application, this meant that pull requests would have these large files, which were usually just automatically merged (who is going to read a million rows of insert statements?). And, bootstrapping a database for local testing, or for CI/CD, would take a lot longer than it should have because these large scripts would have to be run. Admittedly Flyway and Liquibase also have ways to only run certain scripts in certain enviornments, which would have been helpful for this team, but it was the wrong solution for the underlying problem.

There may be some data that makes sense to load via migrations. If you are building a database that has some core lookup tables used to enforce referential integrity that are infrequently updated, this might be appropriate. For example, a database with an address table that has a column for US states, pre-loading the 50 states would be reasonable; when Puerto Rico becomes a state, or you choose to add support for US territories, adding a few more rows is not a big deal. But, if you want to provide ZipCode lookup and want to load roughly 40,000 records, plus the 20 or so that change every year, schema migrations might not be the tool for that (and let's not even get into the nearly million of Canadian postal codes).

Use repeatable migrations when necessary

Both Flyway and Liquibase support re-running "repeatable" migrations when their checksums change. These usually are run after all of the versioned migrations have been run. I have found these most helpful when working with stored procedures and materialized views.

Name your objects and use IF [NOT] EXISTS

Depending on the database, you may not be required to give constraints, indexes or foreign keys names. The database may generate these for you. This can prove to be problematic if a DBA has added an index in production, and then you backfill this with a migration. The best case is you give it a different name and you end up with duplicate indexes, the worst case is you attempt to create something that already exists and the migration fails. If supported, use the IF NOT EXISTS clause, or write a wrapper that does something similar.

Consider occasionally cleaning up the migrations

I admittedly have only done this on one team, but it proved to be valuable in our use case. Early migrations had been used to load some seed data into the database that was used mainly in dev/test environments. Over time, new migrations built on that seed data, moving some of it to different tables, or applying some update statements, and deleting some of it. By rebasing, we greatly reduced the number of scripts that had to run every time the database was bootstrapped for test purposes, and cleaned the codebase of some references to structures that no longer existed in production. Combined with switching to some more optimal commands (Liquibase is extremely slow at loading even a few rows of data if it has to query the database for column types, so adding the type to the column elements greatly improved this speed), we took bootstrapping our local and CI databases from 4 minutes down to less than 1 minute.

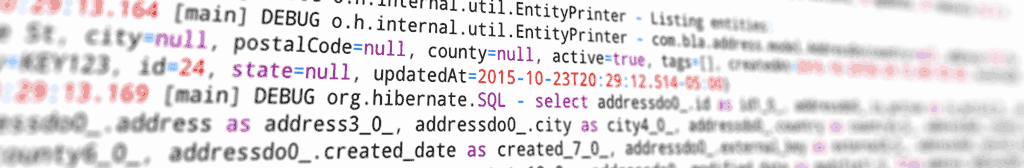

Here is an example of what that might look like:

-- Migration 1 : Create a table

CREATE TABLE t (

id int,

col1 varchar(255),

col2 varchar(255)

);

-- Migration 1a : Seed some data

INSERT INTO t ...

-- Migration 2 : Add a column

ALTER TABLE t

ADD [COLUMN] col3 varchar(255);

-- Migration 3 : Drop a column

ALTER TABLE t

DROP COLUMN col2;

-- Migration 4 : Add some more seed data

INSERT INTO t ...

-- After "rebase":

CREATE TABLE t (

id int,

col1 varchar(255),

col3 varchar(255)

);

INSERT INTO t ... (combine migrations 1a and 4)This does take some time: we did it as part of the regular cadence of spending some time refactoring the codebase. It might be useful to do every few years if the cost of startup time for test environments has gotten very slow. Each tool has a different way to do this in production, but my experience with Flyway it involves doing an out-of-band deploy to production that drops the history table, and then uses the baseline command to say "this database is already at version X, just assume that all migrations up through X have been run".

Migrate Slowly, Avoid Rollbacks

The paid commercial extensions for Flyway and Liquibase add support for rolling back migrations. Rails Active Record migrations also support this functionality. The idea is if you need to undo a migration, you can supply the code to do that at migration creation time. Not every migration can be easily rolled back, at least not without some careful planning. If you decide to drop a table and need to roll it back, you likely need to include a command to create a backup of the table as part of the forward migration, and restore it as part of the rollback.

In the teams that I have been a part of that used migrations, we never used this functionality. I've been on teams that deployed daily or as slowly as weekly, and our approach instead was to make gradual changes. If we needed to drop a table or do some other highly destructive operation, we first deployed code that no longer used that table. After we were satisfied that we were safe to drop the table, we would finally deploy the DROP TABLE script. Or, going further back in time, we used to take the approach of "storage is cheap, don't ever drop anything". But, sometimes it is necessary to refactor a database, maybe to better normalize it, and this involves moving data from one table to another. Again, in these situations we would make very gradual changes before finally dropping any remnants of the old structure. And, at least as far as I can recall, for the three companies I've worked with that used migrations, we did not encounter problems with this slow, conservative approach to database evolution.

Run Migrations Outside of your Production Application

I've been on teams that will run migrations as part of the application startup process. Spring Boot, for example, supports doing this for Flyway and Liquibase. While this is very convenient, it has a major security drawback - your application has access to credentials that likely give it full DDL control over your database. Maybe that is an acceptable risk depending on your application. But, the safer approach would be to run migrations before rolling out your code, likely using the CLI versions of these tools. This way your application only has the DML permissions that it needs. This does usually require some additional setup on your CI/CD stack, but the security benefit is likely worth it.

Final Thoughts

These are just some practices that have worked well for me and the teams I have been a part of. The most valuable thing though has been using database migrations to begin with. I certainly got tired of checking on rollout "Hey, did script 2015-01-01-add-table-x.sql get run on production yet?", or worse having to work with a DBA elsewhere in the building to coordinate running these scripts.

Have you used one of these tools, or one for another language? Are there tips you would like to share?

Nice and pragmatic writeup. You may also take a look at https://bytebase.com. It’s a more comprehensive database development toolset. (Disclaimer: I am one of the authors)

I love using Flyway. I never considered separating a Spring Boot application from the database migrations. But I could see how there would be benefits to this.

Hey friend!

Yes, I would argue for keeping them separate from a permissions standpoint. I recall a project I worked on where one developer had set up the local stack to mimic this behavior – creating separate DML and DDL users on the local Docker stack to make sure this even was done in the local environment.

The fact that Spring Boot integrates really well with Flyway and Liquibase makes it very easy to quickly bootstrap a project using those technologies, and in the early phases of getting a project going it might make sense to use those integrations. One other pain I have noticed when using these is that at least with Liquibase, it adds a lot of time to the integration testing phase, as the migrations have to be re-run or verified every time a Spring context is initalized. If you use Mock Beans, or do anything else that would invalidate a context, this can cause unnecessary slowdowns when running tests. I personally prefer running migrations and getting the test database to a working state before the tests are executed, and use built-in transaction handling to ensure the database is restored to that working state.